AI-Powered Detection, Computer Vision

Edge-Based Smart Aid for the Visually Impaired

The Edge-Based Smart Assistance System is an AI-powered project that supports the independent mobility and safety of visually impaired people. By combining computer vision, edge computing, and voice AI, the system acts as a real-time assistant that helps users navigate their surroundings safely and confidently.

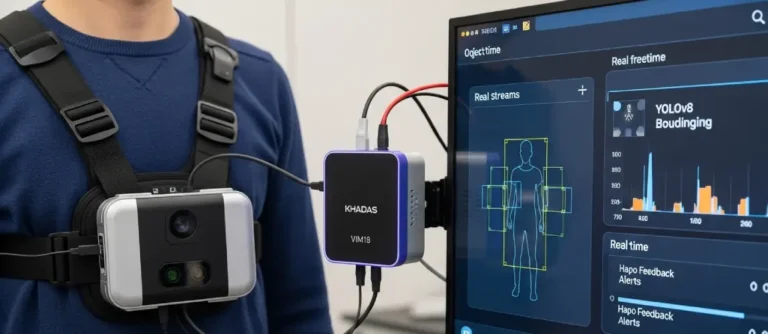

This solution runs a YOLOv8 object detection model, trained with Roboflow, on a compact Khadas VIM1S edge device. Because inference happens on the device rather than in the cloud, latency stays low and no cloud connection is needed. From obstacle detection to real-time feedback, the system is designed to give users situational awareness in an efficient, intuitive, and wearable form.

Project Summary:

The goal was to build a portable, smart, and energy-efficient device that could serve as a digital companion for visually impaired people. Through AI-driven guidance and multisensory feedback, the device enables users to make informed decisions while walking in unfamiliar environments.

Core Technologies Used:

YOLOv8 for real-time object detection

Roboflow for dataset preparation, model training, and deployment

Khadas VIM1S (Edge Device) for local inference

AI Voice Interface for directional and object guidance

Ultrasonic Sensors & Vibration Motor for haptic feedback

Weather API Integration for real-time environmental updates
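The haptic channel comes down to simple arithmetic. A typical ultrasonic ranging sensor reports the round-trip time of an echo, so the distance to an obstacle is half that time multiplied by the speed of sound (about 343 m/s in air at 20 °C). The sketch below converts an echo duration to centimetres and maps it to a vibration duty cycle. The thresholds (`NEAR_CM`, `FAR_CM`) and the linear ramp are illustrative assumptions, not this project's calibration, and the GPIO/PWM calls that would drive a real motor on the Khadas VIM1S are deliberately left out.

```python
# Minimal sketch: ultrasonic echo time -> distance -> vibration strength.
# Thresholds and the linear mapping are illustrative assumptions, not the
# project's actual calibration. Hardware I/O (GPIO/PWM) is omitted.

SPEED_OF_SOUND_CM_PER_S = 34_300  # ~343 m/s in dry air at about 20 °C
NEAR_CM = 30.0   # hypothetical: at or closer than this, vibrate at full strength
FAR_CM = 200.0   # hypothetical: at or beyond this, no vibration


def echo_to_distance_cm(echo_seconds: float) -> float:
    """Convert a round-trip echo duration to a one-way distance in cm."""
    if echo_seconds < 0:
        raise ValueError("echo duration must be non-negative")
    return echo_seconds * SPEED_OF_SOUND_CM_PER_S / 2


def distance_to_duty_cycle(distance_cm: float) -> float:
    """Map distance to a PWM duty cycle in [0.0, 1.0]; closer means stronger."""
    if distance_cm <= NEAR_CM:
        return 1.0
    if distance_cm >= FAR_CM:
        return 0.0
    # Linear ramp from 1.0 at NEAR_CM down to 0.0 at FAR_CM.
    return (FAR_CM - distance_cm) / (FAR_CM - NEAR_CM)


if __name__ == "__main__":
    for echo in (0.0015, 0.005, 0.02):
        d = echo_to_distance_cm(echo)
        print(f"echo={echo:.4f}s distance={d:.1f}cm duty={distance_to_duty_cycle(d):.2f}")
```

A linear ramp is the simplest choice; a stepped mapping (for example, three fixed vibration levels) may be easier for users to tell apart and would only change `distance_to_duty_cycle`.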

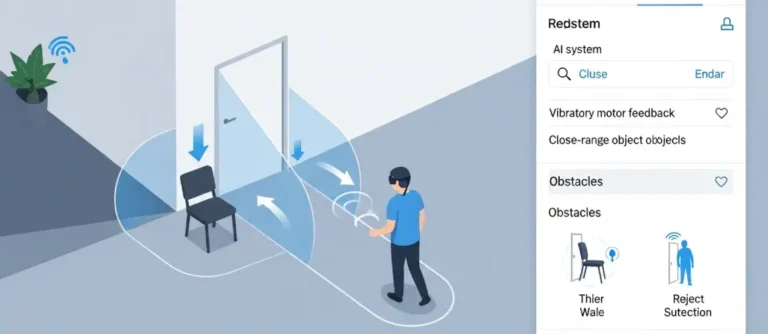

- Real-Time Object & Obstacle Detection – Alerts users about nearby objects (walls, people, vehicles, etc.) using trained computer vision models.

- Voice Command Interface – Users speak to the device to request directional prompts and status updates.

- Haptic Feedback System – Vibration motors provide intuitive alerts about danger zones or obstructions.

- Weather & Environment Awareness – The system informs users about current weather conditions to help them plan safer movement.

- Offline Edge Computing – Object detection and feedback run locally on the edge device, ensuring low latency, data privacy, and uninterrupted performance; only the weather updates need a network connection.

- Wearable & Lightweight Design – Designed for portability, comfort, and daily use, with core navigation features that work without an internet connection.
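To turn raw detections into the short prompts described above, each bounding box can be reduced to a coarse direction (left, ahead, right) from its horizontal centre, and the most relevant object announced first. The sketch below assumes detections arrive as a simplified label, confidence, and pixel-corner record, similar in spirit to the box data an object detector such as YOLOv8 produces. The `Detection` type, the thirds-based direction split, the 0.5 confidence cutoff, and the "largest box is closest" heuristic are all illustrative assumptions rather than this project's code.

```python
# Minimal sketch: reduce object detections to a spoken guidance prompt.
# Detection format, thresholds, and heuristics are illustrative assumptions.
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    label: str         # class name, e.g. "person"
    confidence: float  # detector score in [0, 1]
    x1: float          # bounding box corners in pixels
    y1: float
    x2: float
    y2: float

    @property
    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


MIN_CONFIDENCE = 0.5  # hypothetical cutoff for announcing an object


def direction_of(det: Detection, frame_width: int) -> str:
    """Split the frame into thirds and report where the box centre falls."""
    centre_x = (det.x1 + det.x2) / 2
    if centre_x < frame_width / 3:
        return "on your left"
    if centre_x > 2 * frame_width / 3:
        return "on your right"
    return "ahead"


def guidance_message(detections: List[Detection], frame_width: int) -> Optional[str]:
    """Announce the largest confident detection, used here as a rough proxy
    for the closest object. Returns None when nothing qualifies."""
    confident = [d for d in detections if d.confidence >= MIN_CONFIDENCE]
    if not confident:
        return None
    nearest = max(confident, key=lambda d: d.area)
    return f"{nearest.label.capitalize()} {direction_of(nearest, frame_width)}"


if __name__ == "__main__":
    frame = [
        Detection("person", 0.91, 500, 100, 620, 460),
        Detection("car", 0.40, 0, 200, 300, 400),
    ]
    print(guidance_message(frame, frame_width=640))
```

Box size is only a stand-in for distance; on the actual device, the ultrasonic reading would be a more reliable signal for deciding which obstacle to announce first.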

Purpose & Impact:

This platform was developed to enhance independence, confidence, and safety for individuals with visual impairments. By combining smart technologies with practical design, the system not only provides real-time assistance but also promotes digital inclusivity and accessibility.

“It’s not just a tool—it’s a digital companion that walks with you, hears for you, and sees for you.”

Target Users

Visually impaired individuals.

Elderly people with navigation difficulties.

Healthcare organizations and NGOs focused on accessibility.

Development Stack

Object Detection: YOLOv8 + Roboflow

Hardware: Khadas VIM1S, Ultrasonic Sensor, Vibration Motor

Programming: Python, OpenCV, TensorFlow Lite

Voice AI: Custom voice command module

Weather: OpenWeather API Integration
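For the weather feature, OpenWeather's Current Weather Data endpoint (`https://api.openweathermap.org/data/2.5/weather`) returns JSON with a `weather` list containing a text `description` and a `main` object containing `temp` and `humidity`. The sketch below builds the request URL and turns a response into one sentence suitable for text-to-speech. The API key, city, and wording are placeholders, and the HTTP call is kept in its own function so the formatting can be tested offline. It illustrates the general pattern and is not this project's integration code.

```python
# Minimal sketch: OpenWeather current-weather JSON -> one spoken sentence.
# The API key and city are placeholders; wording is an illustrative choice.
import json
import urllib.parse
import urllib.request

BASE_URL = "https://api.openweathermap.org/data/2.5/weather"


def build_url(city: str, api_key: str) -> str:
    """Build a Current Weather request URL with metric units (°C)."""
    query = urllib.parse.urlencode({"q": city, "appid": api_key, "units": "metric"})
    return f"{BASE_URL}?{query}"


def fetch_weather(city: str, api_key: str, timeout: float = 5.0) -> dict:
    """Fetch and decode the JSON response (requires network access)."""
    with urllib.request.urlopen(build_url(city, api_key), timeout=timeout) as resp:
        return json.load(resp)


def weather_sentence(data: dict) -> str:
    """Summarise the fields a pedestrian is most likely to care about."""
    description = data["weather"][0]["description"]
    temp_c = round(data["main"]["temp"])
    humidity = data["main"]["humidity"]
    return f"Currently {description}, {temp_c} degrees Celsius, humidity {humidity} percent."


if __name__ == "__main__":
    # Sample payload trimmed to the fields used above, so this runs offline.
    sample = {
        "weather": [{"main": "Rain", "description": "light rain"}],
        "main": {"temp": 21.6, "humidity": 83},
        "name": "Example City",
    }
    print(weather_sentence(sample))
```

Separating `fetch_weather` from `weather_sentence` also makes it easy to cache the last response on the device and keep announcing it when the network drops.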

Project Timeline

Research & Dataset Preparation: 1 week

Model Training & Optimization: 2 weeks

Hardware Integration & Testing: 1 week

Final Deployment & User Trials: 1 week


Let’s Build Something Great Together

Ready to transform your idea into a powerful digital solution?

Why Choose GenixStack Solutions?

Partner with GenixStack Solutions to bring your vision to life with expert development, design, and innovation.

We’re just one message away from turning your next big project into reality.